Written by

Eloisa Mae

Reviewed by

Henry Dornier

Published on

Oct 29, 2025

Call center agent scorecards show you how to replicate your top performers' success across your entire team. Here's what you need to know about building scorecards that work.

What is a call center agent scorecard?

A call center agent scorecard tracks how well sales reps perform during customer calls.

Scorecards define what effective selling looks like in your contact center. They grade each rep against those standards.

What you track:

Hard metrics: conversion rates, talk time, appointments set

Selling skills: objection handling, value communication, closing technique

Scorecards turn vague feedback like "improve your pitch" into concrete data. Instead, you can show reps that they score 6/10 on objection handling but 9/10 on their opening. That specificity makes coaching stick.

You might run the same training for everyone and hire similar profiles. Yet performance gaps persist: a handful of reps crush quota while the rest hover at 60% attainment. That's why you need scorecards.

Agent scorecards reveal exactly what top performers do differently. They measure the behaviors that drive conversions. They track which skills separate closers from callers.

Sales leaders use scorecards to:

Spot coaching opportunities before they become quota misses

Maintain consistency across shifts and team leads

Connect rep behavior to revenue outcomes

Create fair, data-driven performance reviews

The best scorecards reveal what drives sales and what changes will boost conversions.

How scorecards work

Call center quality monitoring scorecards turn sales calls into measurable data. They split interactions into categories, weight each one, and calculate a total score.

Standard evaluation categories include:

Sales process adherence: Script following, qualification questions, closing attempts

Selling skills: Value articulation, objection handling, urgency creation, rapport-building

Product knowledge: Feature explanations, competitive positioning, use case matching

Efficiency metrics: Talk time, conversion rate, appointments set, deals closed

Each category gets a weight based on its revenue impact. Home improvement centers might weight appointment-setting at 40% since appointments fill their pipeline. A business-to-consumer (B2C) sales center might weight close rate and deal size more heavily instead.

Scoring happens through:

Manual evaluation (sales managers review recorded calls)

Automated scoring (AI analyzes pitch delivery, keywords, objection patterns)

Hybrid approach (AI flags calls for manager review)

Most scorecards use a 1-10 or 1-5 scale per criterion. The system multiplies each score by its weight and sums the results to produce an overall performance score.

Benefits of call center scorecards

Scorecards close the gap between knowing you need better performance and knowing how to get it. Here's what scorecards deliver for sales teams:

Scorecards drive consistent sales execution

Scorecards define your sales process once. Every rep, on every shift, executes the same proven approach. You stop relying on individual managers to decide what "good selling" means.

Conversion rates go up because every prospect hears the same strong pitch, regardless of who answers.

Sales managers skip subjective coaching

Managers grade against specific, documented criteria instead of gut feel. Two managers reviewing the same call should reach the same conclusion. This objectivity protects against bias and creates trust in the evaluation process.

Team leaders gain coaching precision

Vague feedback disappears. Instead of "handle objections better," managers say, "You scored 5/10 on price objections. Listen to the call from Tuesday at 2:14 PM where you skipped our value reframe." Coaches spend less time guessing what went wrong and more time fixing it.

Reps receive clear performance visibility

Reps know exactly what you're measuring and how you're scoring it. Performance reviews feel less arbitrary. They can track their own progress. They understand why they received specific scores. They see concrete proof that improvement efforts translate to higher earnings.

Operations connects behavior to revenue

Scorecards link rep actions to business outcomes. You see how specific behaviors drive results; for example, you might find that reps who use customer success stories close at higher rates. Leadership sees where skills drive revenue and where training pays off.

8 call center scorecard metrics that drive sales

Here's what to track when building your sales scorecard:

Quantitative metrics

Hard metrics show whether your reps convert prospects or burn through leads without results.

Prospect-to-customer conversion rate

This is your north star metric. Track it by call type (warm leads vs. cold calls), by product category, and by rep.

Low conversion rates usually point to one of two problems: your reps lack the right skills, or your leads are low quality.
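Segmenting conversion rate is a simple group-and-divide. A minimal sketch, assuming each call record carries the rep, a call-type label, a product, and a converted flag; the `Call` fields and sample data are hypothetical stand-ins for a dialer or CRM export:

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Call:
    rep: str
    call_type: str   # e.g. "warm" or "cold" (hypothetical labels)
    product: str
    converted: bool

def conversion_by(calls, key):
    """Conversion rate per segment; `key` picks the segment from each call."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [calls, conversions]
    for call in calls:
        bucket = totals[key(call)]
        bucket[0] += 1
        bucket[1] += call.converted  # True counts as 1, False as 0
    return {segment: conversions / count for segment, (count, conversions) in totals.items()}

calls = [
    Call("ana", "warm", "windows", True),
    Call("ana", "cold", "windows", False),
    Call("ben", "warm", "roofing", True),
    Call("ben", "warm", "windows", False),
]
print(conversion_by(calls, key=lambda c: c.call_type))  # {'warm': 0.6666666666666666, 'cold': 0.0}
print(conversion_by(calls, key=lambda c: c.rep))        # {'ana': 0.5, 'ben': 0.5}
```

Passing a different `key` (call type, product, or rep) reuses the same logic for each cut of the data.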

Talk time per interaction

Shorter isn't always better. Top performers often spend more time building rapport and working through every objection. Compare talk time against conversion rate.

Reps with low talk time and low conversion are rushing. Reps with high talk time and low conversion are spinning their wheels.
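This diagnosis is a two-by-two comparison. A minimal sketch, assuming you compare each rep against the team median for both talk time and conversion; the median cutoff and the sample reps are hypothetical choices, not a standard:

```python
from statistics import median

def diagnose(avg_talk_minutes: float, conversion_rate: float,
             talk_median: float, conversion_median: float) -> str:
    """Classify a rep by talk time and conversion relative to team medians."""
    if conversion_rate >= conversion_median:
        return "on track"
    # Low conversion: long calls suggest spinning wheels, short calls suggest rushing
    return "spinning their wheels" if avg_talk_minutes > talk_median else "rushing"

# Hypothetical reps: (average talk minutes, conversion rate)
reps = {
    "ana": (11.5, 0.14),
    "ben": (4.0, 0.06),
    "cruz": (15.0, 0.07),
    "dee": (9.0, 0.13),
}
talk_median = median(t for t, _ in reps.values())
conversion_median = median(c for _, c in reps.values())
for name, (talk, conv) in reps.items():
    print(name, diagnose(talk, conv, talk_median, conversion_median))
```

With these numbers, ben is flagged as rushing and cruz as spinning their wheels.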

Appointment booking rate

For consultative sales with multi-step processes, the appointment-setting rate predicts future revenue.

Reps may book meetings, but do prospects actually show up? A high no-show rate points to two possible problems: a weak confirmation process or unclear value communication.

Revenue per call measures rep productivity

This combines conversion rate with average deal size. A rep converting at 15% on $500 deals generates less value than a rep converting at 12% on $800 deals ($75 vs. $96 per call).

Track this to identify who's selecting better prospects or upselling successfully.
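The comparison above is a single multiplication. A minimal sketch of the arithmetic; over a real period, total revenue divided by total calls gives the same figure when average deal size is measured across closed deals:

```python
def revenue_per_call(conversion_rate: float, avg_deal_size: float) -> float:
    """Expected revenue per call = conversion rate x average deal size."""
    return conversion_rate * avg_deal_size

rep_a = revenue_per_call(0.15, 500)  # 15% on $500 deals
rep_b = revenue_per_call(0.12, 800)  # 12% on $800 deals
print(f"Rep A: ${rep_a:.2f}/call, Rep B: ${rep_b:.2f}/call")  # Rep A: $75.00/call, Rep B: $96.00/call
```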

Qualitative metrics

These selling skills explain why two reps with the same talk time get completely different results.

Opening strength

Strong performers use permission-based openers. They build credibility fast. They set clear agendas.

Weak performers jump straight into pitches. They skip building rapport and don't check if the prospect has time.

Objection handling

Top performers acknowledge concerns, ask clarifying questions, and reframe objections into value discussions.

Weak performers hear "not interested" and either ignore it, get defensive, or give up right away.

Value communication

Strong performers connect features to specific customer outcomes. They use relevant examples and success stories.

Weak performers recite product specifications without connecting them to the prospect's needs.

Closing technique

Top performers create urgency at the right time. They use assumptive closes. They handle final hesitations with confidence.

Weak performers either push too hard (destroying trust) or fail to ask for commitment at all.

Quantitative vs. qualitative metrics: A quick comparison

Both types of metrics matter, but they solve different problems. One shows the gap, the other shows how to close it.

| Metric type | Example metrics | Pros | Cons | Best fit use cases |
|---|---|---|---|---|
| Quantitative | Conversion rate, talk time, appointments set, revenue per call | Objective, simple to track, tied to revenue | Misses why reps succeed or fail, can incentivize wrong behaviors | High-volume transactional sales, performance trending, commission calculation |
| Qualitative | Opening strength, objection handling, value communication, closing technique | Reveals coachable skill gaps, predicts future performance improvement | Requires manager evaluation time, introduces some subjectivity | Consultative sales, new rep development, identifying best practices |

Effective performance tracking requires both. Use quantitative metrics to identify who needs coaching and measure results. Use qualitative metrics to see what to coach and which behaviors to copy.

Call center scorecard examples

Let's walk through three scorecard types you can adapt for your sales team:

QA monitoring scorecard

This scorecard evaluates call quality by reviewing recorded sales calls. You can score calls manually or with automated tools.

Sample structure:

Opening and qualification (15%): Used effective opener, asked qualifying questions, confirmed decision-maker

Value communication (25%): Articulated benefits with clarity, relevant examples, connected to prospect needs

Objection handling (30%): Acknowledged concerns, asked clarifying questions, reframed the conversation

Closing (25%): Created appropriate urgency, asked for commitment, handled final hesitations

Process adherence (5%): Followed script framework, documented in CRM, scheduled follow-up

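Scoring: Each criterion receives a 1-5 rating. Multiply each rating by its weight and sum the results for the total score. A rep scoring 4 on objection handling (30% weight) but 2 on closing (25% weight) earns 1.7 points from those two criteria (4 × 0.30 + 2 × 0.25). The final score, such as 3.2/5, depends on the ratings for the remaining three criteria.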
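A minimal sketch of the weighted calculation for the sample QA scorecard above. The objection-handling (4) and closing (2) ratings come from the example; the other three ratings are hypothetical, chosen so the total matches the 3.2/5 figure. The criterion keys are illustrative names, not a product's field names:

```python
# Weights from the sample QA scorecard (15% + 25% + 30% + 25% + 5% = 100%)
QA_WEIGHTS = {
    "opening_qualification": 0.15,
    "value_communication": 0.25,
    "objection_handling": 0.30,
    "closing": 0.25,
    "process_adherence": 0.05,
}

def qa_score(ratings: dict[str, int], weights: dict[str, float] = QA_WEIGHTS) -> float:
    """Weighted 1-5 score: multiply each rating by its weight, then sum."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 100%")
    missing = weights.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    if not all(1 <= r <= 5 for r in ratings.values()):
        raise ValueError("ratings must be on a 1-5 scale")
    return round(sum(ratings[name] * w for name, w in weights.items()), 2)

ratings = {
    "opening_qualification": 4,  # hypothetical
    "value_communication": 3,    # hypothetical
    "objection_handling": 4,     # from the example
    "closing": 2,                # from the example
    "process_adherence": 3,      # hypothetical
}
print(qa_score(ratings))  # 3.2
```

Validating that weights sum to 100% catches a common spreadsheet error where a criterion is added without rebalancing the others.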

Call center KPI scorecard

This scorecard tracks performance through metrics pulled from your dialer and CRM.

Sample metrics:

Conversion rate: Target ≥ 12% (40% weight)

Talk time: Target 8-12 minutes (15% weight)

Appointments set: Target ≥ 5 daily (25% weight)

Revenue per call: Target ≥ $45 (15% weight)

Follow-up completion: Target ≥ 90% (5% weight)

Scoring: Reps receive points based on how close they land to each target. Meeting all targets earns 100 points. Missing the conversion target by 3 percentage points (9% instead of 12%) might deduct 20 points from the final score.
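One way to turn "how close they land to targets" into points, as a sketch under stated assumptions: "at least" targets earn proportional credit capped at 100%, and talk time earns full credit inside 8-12 minutes, falling linearly to zero 4 minutes outside that range. Both rules and the `DailyKpis` fields are hypothetical. Under proportional credit, missing conversion by 3 percentage points costs 10 of its 40 points; a steeper curve, like the 20-point deduction in the example, is an equally valid design choice.

```python
from dataclasses import dataclass

@dataclass
class DailyKpis:
    conversion_rate: float       # 0.12 means 12%
    avg_talk_minutes: float
    appointments_set: float      # per day
    revenue_per_call: float      # dollars
    follow_up_completion: float  # 0.90 means 90%

def at_least(actual: float, target: float) -> float:
    """Proportional credit for 'target or higher' metrics, capped at full credit."""
    return min(actual / target, 1.0)

def within_range(actual: float, low: float, high: float, tolerance: float = 4.0) -> float:
    """Full credit inside [low, high]; credit falls linearly to zero `tolerance` units outside."""
    if low <= actual <= high:
        return 1.0
    distance = low - actual if actual < low else actual - high
    return max(0.0, 1.0 - distance / tolerance)

def kpi_points(k: DailyKpis) -> float:
    # (points available, share earned) using the sample targets and weights
    parts = [
        (40, at_least(k.conversion_rate, 0.12)),
        (15, within_range(k.avg_talk_minutes, 8, 12)),
        (25, at_least(k.appointments_set, 5)),
        (15, at_least(k.revenue_per_call, 45)),
        (5, at_least(k.follow_up_completion, 0.90)),
    ]
    return sum(points * credit for points, credit in parts)

all_met = DailyKpis(0.12, 10, 5, 45, 0.90)
low_conversion = DailyKpis(0.09, 10, 5, 45, 0.90)
print(kpi_points(all_met))                   # 100.0
print(round(kpi_points(low_conversion), 1))  # 90.0
```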

Hybrid scorecard (quantitative + qualitative)

This approach combines automated KPI tracking with manual quality evaluation for complete visibility.

Sample structure:

Quantitative section (50% weight): Conversion rate, talk time, appointments set, revenue per call

Qualitative section (50% weight): Opening, value communication, objection handling, closing

Your system captures metrics from every call automatically. Sales managers hand-score 3-5 sample calls per rep each week on the quality criteria. The two section scores combine into one performance rating.
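A minimal sketch of the 50/50 blend, assuming the quantitative section is already on a 0-100 scale and the manual ratings are 1-5 scorecard totals rescaled by dividing by 5. That rescaling maps a rating of 1 to 20 rather than 0; mapping (rating - 1) / 4 onto 0-100 is an alternative. The function and its parameters are hypothetical:

```python
from statistics import mean

def hybrid_score(kpi_points: float, manual_ratings: list[float],
                 scale_max: float = 5.0, quantitative_weight: float = 0.5) -> float:
    """Blend a 0-100 KPI score with manager ratings rescaled to 0-100.

    kpi_points: automated quantitative score, already on a 0-100 scale.
    manual_ratings: this week's hand-scored call totals on a 1-to-scale_max scale.
    """
    if not manual_ratings:
        raise ValueError("need at least one manually scored call this week")
    qualitative = mean(manual_ratings) * 100 / scale_max
    return quantitative_weight * kpi_points + (1 - quantitative_weight) * qualitative

# KPI section scored 90/100; a manager rated three calls 3.0, 3.5, and 4.0 out of 5.
print(hybrid_score(90, [3.0, 3.5, 4.0]))  # 80.0
```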

High-impact use cases for call center scorecards

Generic scorecards measure everything but fix nothing. Here's where focused scorecards actually move the needle on sales performance:

Tracking selling skills that drive conversions

Product knowledge is table stakes. Selling skills separate quota-hitters from chronic underperformers.

Scorecards quantify subjective elements like rapport-building, objection handling, and urgency creation. You can grade whether reps:

Build credibility in the opening 30 seconds

Ask questions that uncover buying motivation

Use stories and examples that resonate with specific prospect types

Create appropriate urgency without damaging trust

Two reps pitch the same product to similar prospects. One converts at 40%, the other at 15%. Selling skill makes the difference. Measure it to know who to coach and who should train others.

Identifying coaching opportunities before they become quota misses

Waiting until the end of the quarter to fix performance problems means months of lost sales. Your reps miss out on commission and your team misses revenue targets.

Scorecards spot trends early:

A rep's closing scores drop over two weeks? Burnout or a spike in tough objections might be the cause.

Many reps score low on the same thing? Your training failed to stick or market conditions shifted.

Top performers share specific behaviors others don't? You found best practices to scale across your team.

Set up alerts that trigger when performance drops. Say a rep's weekly average falls 10% or they score below 6/10 two weeks in a row. Their manager gets an alert with specific call examples and coaching tips on what to fix.
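The two alert conditions can be checked with a few comparisons. A minimal sketch, assuming "falls 10%" means a week-over-week relative drop and that weekly averages are on a 1-10 scale; `should_alert` is a hypothetical helper, and routing the alert to a manager is left out:

```python
def should_alert(weekly_averages: list[float],
                 drop_threshold: float = 0.10,
                 floor: float = 6.0) -> bool:
    """Return True if the latest week warrants a coaching alert.

    weekly_averages: a rep's weekly scorecard averages, oldest first.
    Rule 1: this week fell at least `drop_threshold` below last week.
    Rule 2: both of the last two weeks sat below `floor`.
    """
    if len(weekly_averages) < 2:
        return False  # not enough history to compare
    last_week, this_week = weekly_averages[-2], weekly_averages[-1]
    dropped = last_week > 0 and (last_week - this_week) / last_week >= drop_threshold
    stuck_low = last_week < floor and this_week < floor
    return dropped or stuck_low

print(should_alert([7.5, 8.0, 7.0]))  # True: 12.5% drop week over week
print(should_alert([6.5, 5.8, 5.9]))  # True: below 6/10 two weeks running
print(should_alert([7.8, 7.6]))       # False
```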

Aligning rep performance with revenue goals

Your company wants to grow revenue by 25% this quarter. Which rep behaviors will drive that growth?

Scorecards create the connection between individual actions and business outcomes. You can correlate:

Which selling skills predict high conversion rates

Whether faster talk time improves or hurts deal size

How objection handling quality relates to close rates

Which opening techniques book more qualified appointments

Say your analysis shows that reps who score 8+ on "using customer success stories" convert at 18%, while reps scoring 5-7 convert at 12% with the same products and pricing. Now you know what to coach.
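A minimal sketch of that analysis: group reps into score bands, pool their calls and sales, and compare conversion per band. The rep data is hypothetical, constructed to reproduce the 18% and 12% figures, and `RepStats` is an illustrative record type:

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class RepStats:
    name: str
    story_score: int  # 1-10 rating on "using customer success stories"
    calls: int
    sales: int

def score_band(score: int) -> str:
    if score >= 8:
        return "8+"
    if score >= 5:
        return "5-7"
    return "below 5"

def conversion_by_band(reps: list[RepStats]) -> dict[str, float]:
    """Pool calls and sales per score band, then compute each band's conversion rate."""
    calls = defaultdict(int)
    sales = defaultdict(int)
    for rep in reps:
        band = score_band(rep.story_score)
        calls[band] += rep.calls
        sales[band] += rep.sales
    return {band: sales[band] / calls[band] for band in calls if calls[band]}

reps = [
    RepStats("ana", 9, 200, 36),
    RepStats("ben", 8, 100, 18),
    RepStats("cruz", 6, 150, 18),
    RepStats("dee", 5, 50, 6),
]
for band, rate in conversion_by_band(reps).items():
    print(f"{band}: {rate:.0%}")  # 8+: 18%, then 5-7: 12%
```

Pooling calls (rather than averaging each rep's rate) keeps low-volume reps from skewing a band. A gap between bands shows association, not proof that the skill causes the lift, so compare reps with similar lead quality before drawing conclusions.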

Benchmarking performance across teams and time

Scorecards let you compare performance across:

Individuals (who's crushing quota, who needs support)

Teams (which shift or manager generates better results)

Time periods (are we improving month-over-month)

Products (which offerings close easiest)

This visibility reveals where to invest resources. If Team A outperforms Team B despite similar lead quality and comp plans, Team A's manager is doing something worth replicating.

If performance drops every Friday afternoon, you might have a fatigue or scheduling issue affecting close rates.

Criteria for choosing the right scorecard approach

The wrong scorecard system creates more work than value. Choose based on these factors before committing:

Scalability

Your scorecard needs to work whether you're evaluating 10 reps or 500. Manual evaluation works for small teams but becomes impractical at scale.

How many calls can your managers review? Say you have 100 reps each taking 80 calls daily. That's 8,000 interactions per day. Even reviewing 5% means evaluating 400 calls daily.
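To see what 400 reviews a day means in staff time, here is a rough sketch. The 15 minutes per review and 8 review hours per manager per day are hypothetical assumptions (and generous ones, since managers also coach); plug in your own numbers:

```python
import math

def manual_review_load(reps: int, calls_per_rep: int, review_pct: int,
                       minutes_per_review: float, review_hours_per_manager: float):
    """Estimate the daily manual QA workload for a given sampling rate."""
    daily_calls = reps * calls_per_rep
    reviews = math.ceil(daily_calls * review_pct / 100)
    hours = reviews * minutes_per_review / 60
    managers = math.ceil(hours / review_hours_per_manager)
    return daily_calls, reviews, hours, managers

calls, reviews, hours, managers = manual_review_load(100, 80, 5, 15, 8)
print(f"{calls} calls -> {reviews} reviews -> {hours:.0f} hours -> {managers} full-time reviewers")
```

Under these assumptions, a 5% sample needs about 100 reviewer-hours a day, or 13 people doing nothing but QA.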

Can your scoring system handle growth? Adding reps, products, or locations should require minimal process changes. Spreadsheet-based scorecards break down fast. Look for platforms that automate score collection and reporting.

Integrations with dialers and CRM systems

Standalone scorecards create manual work. Your scoring system should connect to:

Dialer platforms, so managers can review calls without downloading files or switching systems

CRM systems, to connect performance scores to prospect data, deal stages, and conversions

Workforce management tools, to connect performance scores to scheduling, training, and capacity planning

Commission systems, to tie scorecard results to compensation calculations

Cost and complexity

Budget matters. So does implementation time and ongoing maintenance.

Initial investment includes:

Software licensing or platform fees

Integration and setup costs

Training for managers and team leads

Time to build evaluation criteria and weights

Ongoing costs include:

Subscription or usage-based pricing

Manager time for manual evaluation

Calibration sessions and quality checks

System updates and maintenance

Start with a pilot program before committing to enterprise-wide rollout. Test your scorecard approach with one team for 4-6 weeks. Measure the impact on conversion rates and quota attainment. Scale what works.

Customization capabilities

No two sales floors operate the same. Your scorecard should adapt to your specific needs.

Evaluation criteria should reflect what drives conversions in your market and product category.

Weighting schemes should align with business priorities.

Scoring scales should match how your team thinks about performance: 1-5, 1-10, or pass/fail.

Reporting views should show each role what it needs. Reps see personal scores. Directors see team trends and pipeline impact.

Off-the-shelf scorecards rarely fit your needs. Can you change evaluation forms without vendor help? Can you add custom metrics specific to your products or objection patterns? Can you create different scorecards for different product lines or sales motions?

Implementation strategy: Rolling out scorecards that work

Here's how to deploy your QA scorecard without triggering resistance or confusion:

Start with a pilot program

Don't launch scorecards across your entire sales floor on day one. Pick one team (10-15 reps) with a supportive manager. Run a 4-6 week pilot.

What to test:

Do your evaluation criteria measure what drives conversions?

Can managers complete evaluations in reasonable time?

Do reps understand their scores and how to improve?

Does the data reveal actionable coaching opportunities?

Success metrics:

Manager completion rate (are they finishing scorecards on schedule?)

Rep engagement (are they reviewing scores and asking questions?)

Performance improvement (are conversion rates trending up after coaching?)

Time investment (is the juice worth the squeeze?)

Gather feedback from all stakeholders: reps, managers, team leads. Find what confuses them, what works, and what criteria need changes. Fix those issues before scaling to other teams.

Calibrate managers to remove scoring drift

Many managers evaluating calls means many interpretations of what "good selling" looks like. Without calibration, one manager's "8" becomes another's "6."

Run monthly calibration sessions:

All managers score the same 3-5 calls on their own

Compare scores and discuss discrepancies

Agree on specific examples of each rating level

Document decisions to maintain consistency
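After managers score the same calls independently, two quick checks make the comparison concrete: which calls had the widest disagreement, and whether one manager consistently scores above or below peers. A minimal sketch with hypothetical managers and scores; the 2-point spread threshold is an assumption:

```python
from collections import defaultdict
from statistics import mean

# scores[call_id][manager] = that manager's independent 1-10 score for the call
scores = {
    "call-1": {"lee": 8, "morgan": 6, "priya": 7},
    "call-2": {"lee": 7, "morgan": 4, "priya": 6},
    "call-3": {"lee": 9, "morgan": 7, "priya": 8},
}

def discrepancies(scores, max_spread=2):
    """Calls where the highest and lowest manager scores differ by more than max_spread."""
    spreads = {call_id: max(s.values()) - min(s.values()) for call_id, s in scores.items()}
    return {call_id: spread for call_id, spread in spreads.items() if spread > max_spread}

def manager_bias(scores):
    """Average points each manager scores above (+) or below (-) the per-call mean."""
    offsets = defaultdict(list)
    for by_manager in scores.values():
        call_mean = mean(list(by_manager.values()))
        for manager, score in by_manager.items():
            offsets[manager].append(score - call_mean)
    return {manager: round(mean(vals), 2) for manager, vals in offsets.items()}

print(discrepancies(scores))  # {'call-2': 3}
print(manager_bias(scores))   # lee scores high, morgan scores low
```

A consistent negative offset (morgan here) is the "one manager's 8 becomes another's 6" drift; the discussion in the calibration session decides which score the rubric supports.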

Create clear scoring rubrics. Instead of "rate objection handling 1-5," define what each level means:

5: Acknowledges the concern, asks clarifying questions, reframes it into a value discussion, and closes successfully

4: Acknowledges concern, provides reasonable response, maintains momentum

3: Addresses the objection but doesn't fully resolve the prospect's concern

2: Defensive response or gives up too fast

1: Ignores objection or becomes argumentative

Build human oversight into automated systems

AI-powered scorecards can analyze every call your team makes. But they can miss selling context that only humans catch.

Set up escalation rules:

Scores that deviate from the rep's average by a wide margin trigger manager review

High-value deals or strategic accounts get manual verification

Unusual prospect objections get spot-checked

First instance of any new objection type gets manager evaluation
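A minimal sketch of three of these escalation rules as a function that returns the reasons a call needs human review. The thresholds (2 standard deviations from the rep's average, $10,000 deal value) and the `ScoredCall` record are hypothetical; random spot-checks of unusual objections are left out:

```python
from dataclasses import dataclass
from statistics import mean, stdev

@dataclass
class ScoredCall:
    rep: str
    ai_score: float            # automated score on a 1-10 scale
    deal_value: float          # dollars at stake
    objection_types: set[str]  # objection labels detected on the call

def review_reasons(call: ScoredCall, rep_history: list[float], known_objections: set[str],
                   high_value: float = 10_000, z_cutoff: float = 2.0) -> list[str]:
    """Return why a call should go to a manager (empty list means no escalation)."""
    reasons = []
    if len(rep_history) >= 2:
        avg, sd = mean(rep_history), stdev(rep_history)
        if sd > 0 and abs(call.ai_score - avg) / sd >= z_cutoff:
            reasons.append("score far from rep's average")
    if call.deal_value >= high_value:
        reasons.append("high-value deal")
    new_types = call.objection_types - known_objections
    if new_types:
        reasons.append(f"new objection type(s): {', '.join(sorted(new_types))}")
    return reasons

history = [7, 7.5, 8, 7, 7.5]
known = {"price", "timing"}
unusual = ScoredCall("ana", 4.0, 12_000, {"price", "spouse approval"})
routine = ScoredCall("ana", 7.5, 2_000, {"price"})
print(review_reasons(unusual, history, known))  # three reasons
print(review_reasons(routine, history, known))  # []
```

Once a manager has evaluated a new objection type, adding it to the known set keeps later occurrences from escalating.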

Create feedback loops: When managers disagree with AI scoring, document why. Feed that information back to improve the algorithm. This continuous refinement makes automated scoring more accurate over time.

Avoid common pitfalls that sabotage adoption

Measuring too much: Scorecards with 20+ criteria overwhelm managers and reps. Nobody can focus on improving 20 things at once. Start with 5-8 core criteria. Nail those. Then expand.

Scoring without coaching: Scorecard data needs to drive actual coaching conversations. Every evaluation should connect to specific skill development.

Punitive approach: Scorecards that only appear during PIP discussions create fear and resistance. Frame scoring as a development tool. Share both wins and improvement areas.

Ignoring rep input: Reps know which criteria feel fair and which feel arbitrary. Ask them to review evaluation forms before launch. When they help design the system, they're more likely to trust and engage with it.

Inconsistent frequency: Score 5 calls one week, then skip three weeks? You won't get useful trend data. Pick a schedule like 3 evaluations per rep per week and stick to it.

No follow-up on scores: High scores need recognition. Low scores need coaching. Skip either one and you kill motivation. Celebrate improvements in public. Provide specific coaching in private. Tie scorecard performance to commission bonuses when it makes sense.

What tools help track call center scorecard metrics?

Here's what's available across different budget and complexity levels.

| Tool type | Examples | Pros | Cons | Best for |
|---|---|---|---|---|
| Spreadsheets | Excel, Google Sheets | Free, flexible, complete control | Doesn't scale, manual data entry, prone to errors | Teams under 15 reps testing scorecard criteria |
| QA Platforms | Five9 Quality Management, NICE Quality Central, Calabrio | Standardized forms, manager calibration, automated reporting | Requires integration, licensing costs, IT support | Established sales centers with dedicated coaching teams |
| CRM Integrations | Salesforce, Five9, Genesys built-in modules | Data flows on autopilot, unified reporting, no new system to learn | Limited QA features, requires platform expertise | Organizations wanting basic scorecard functionality in existing systems |

Build smarter call center scorecards to drive quota attainment

Call center agent scorecards measure what separates top performers from underperformers. They track the specific behaviors and metrics that drive conversions.

Start with 5-8 core criteria that match your revenue goals. Mix hard numbers with selling skills.

Hard numbers include conversion rate, talk time, and appointments set.

Selling skills include objection handling, value communication, and closing technique.

Weight each one based on what improves conversions in your business. Forget what sounds impressive in leadership meetings.

Test it with one team for 4-6 weeks first. Get your managers calibrated each month so scores stay consistent. Then use those scores to spark real coaching conversations, not to check another box on performance reviews.

Coach what actually closes deals

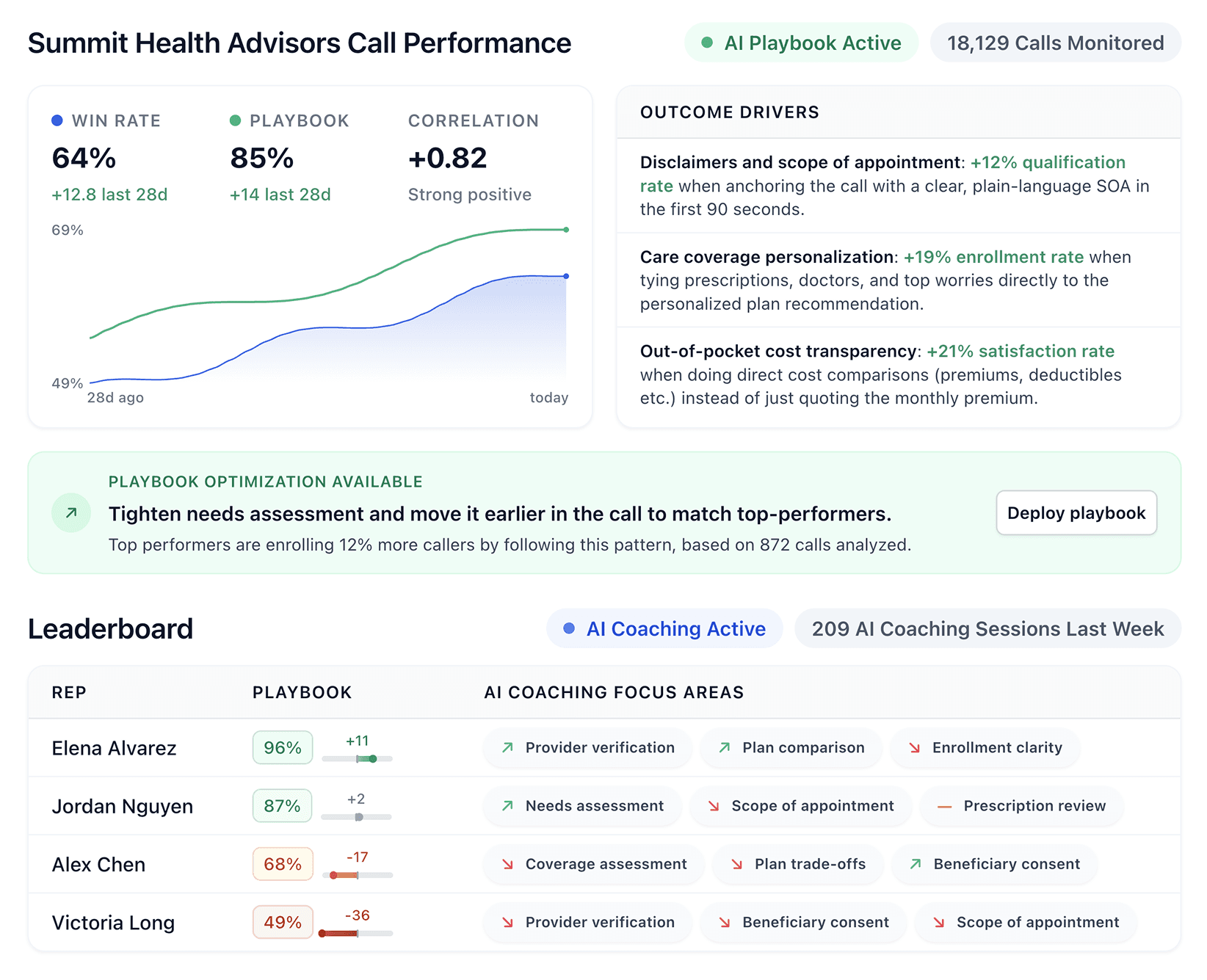

Traditional call center agent scorecards track what you think matters. Alpharun tracks what actually works.

Your top rep closes at 22% while everyone else hovers at 12%. You know they're doing something different. But what? You can't tell what happens in those critical moments when deals close or collapse.

Alpharun analyzes every single call to find the exact behaviors that separate closers from callers. It catches the objection-handling techniques your best reps use. The specific phrases that build urgency without destroying trust. The timing patterns that turn hesitant prospects into buyers.

Then it teaches those same moves to your entire team. Your average performers start closing like your top 10% used to.

That's not guesswork. That's your own playbook, scaled across everyone. See how Alpharun turns your best reps' instincts into your entire team's playbook.

Contact Alpharun to get a demo. Alpharun also helps with high-volume hiring.

Frequently asked questions

How do you create a call center QA scorecard?

You create a call center QA scorecard by identifying 5-8 criteria that drive conversions. Weight them by revenue impact. Build a scoring rubric that defines each level.

Pick quantitative metrics like conversion rate and appointments set. Add qualitative factors like objection handling and closing technique. In competitive markets, objection handling might get 30% weight.

Define what each rating level means in your rubric. Pilot the scorecard with one team first. Calibrate your managers monthly by having them score the same calls together.

How often should you update a call center agent's scorecard?

The traditional answer: you should update a call center agent's scorecard quarterly. Most companies update scorecards every three months based on market conditions, new products, or changing objectives, and make minor adjustments in between when they spot issues.

But that's the old way.

With Alpharun, you're not waiting 90 days to optimize. You're updating continuously based on real data from 100% of your calls.

Here's how the cycle works:

Alpharun scores every single call (not just samples).

It identifies patterns between high-scoring calls and actual revenue.

Your playbook updates automatically to reinforce what's actually working.

Instead of quarterly guesswork, you get a living scorecard that evolves with your team's performance. When a rep discovers a technique that converts, it gets surfaced and scaled immediately, not three months later.

You still need to recalibrate managers after significant updates and communicate changes to reps. But now those changes happen when they matter, not on an arbitrary calendar schedule.

What's the difference between QA monitoring and a scorecard?

The difference between QA monitoring and a scorecard is scope. QA monitoring is the process of reviewing sales calls: it defines what to review, how often, and who evaluates. The scorecard is the tool you use to evaluate those calls: it defines the criteria, weights, and ratings you apply.

You need both.

Can scorecards be customized for inbound vs. outbound calls?

Yes, you can customize scorecards for inbound vs. outbound calls, and you should. Inbound and outbound sales need different scorecards because they succeed on different criteria.

Inbound scorecards focus on qualification and needs discovery since prospects reached out first. Outbound scorecards focus on strong openers and objection handling since you're interrupting prospects.

Base your criteria on what each sales motion requires to succeed.

What are common mistakes in call center scorecard design?

The biggest mistakes in scorecard design are:

Measuring too many things

Using vague definitions

Treating everything as equal

When you measure 15+ criteria, you overwhelm your reps. Vague rubrics lead to inconsistent scoring. Equal weighting ignores what actually drives revenue.

Other common pitfalls include:

Evaluating calls on an inconsistent schedule

Collecting data but never acting on it

Copying competitor scorecards without adapting them to your business

Ignoring feedback from your reps

Using scorecards only for discipline instead of development